With potentially inches of rain in the forecast, it may be several weeks or more before any significant new content is added to the site. That time will likely be spent implementing new features, so if you have any ideas, now is the time to let me know! Email me at sjg@thesjg.com.

Victoria’s Secret

After reading on the BHMBA forums that local athlete extraordinaire Gary H. and others had marked the Victoria’s Secret loop near Victoria Lake, Sam R. and I decided to head out and see if we could find our way around. It was a pretty good ride (despite beginning with a good climb) and had excellent views. I have already cleaned up the log and recorded quite a few audio notes during the ride, so this should make it onto the website fairly shortly, including details on the (few) spots that are tricky to navigate.

Log: http://connect.garmin.com/activity/86306860

Mileage (car): 0 (did not drive)

Mileage (bike): 10mi

Website changes and a new blog

Yesterday and today I have been working on a number of non-functional website changes. These include some static content, such as the About page for interested parties, as well as the Legal and Advertising pages. Also new is this blog, which is intended to bring interested parties both a better understanding of the what, why and how of this site’s operation and relevant trail news and happenings from around the Black Hills.

This blog is brand new as of today, but I have already back-dated a couple of posts and may back-date more in the future in order to provide some semblance of linearity.

Trail 40L recon.

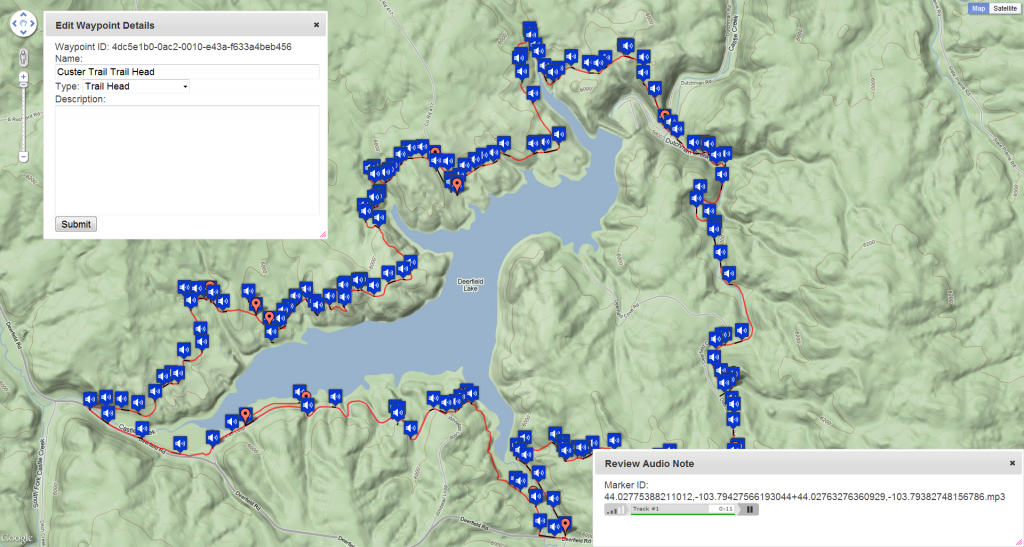

Made my way out to Deerfield Lake today to ride the Deerfield Lake Loop, also known as Trail 40L. Based on the Forest Service literature I expected to do the loop twice in about 4 hours, once clockwise and once counter-clockwise. The Forest Service puts the distance at 10 miles and calls the terrain moderate; in reality the route is more like 12 miles with, conservatively, 1800+ feet of elevation gain. I ended up doing one slow clockwise loop that clocked in around 3:20. That seemed like a good choice of direction: while there are very steep sections either way, going clockwise means you get to make up a lot of your elevation gain on double track with a relatively easy grade.

This was the first ride on which I annotated my progress with a digital audio recorder attached to the shoulder strap of my CamelBak. Post-ride I rejiggered the website backend to show all of these audio annotations as a map overlay, so I can reference my audio notes while writing track and waypoint descriptions. All in all this is working quite splendidly so far.

Log: http://connect.garmin.com/activity/83896760

Mileage (car): 100

Mileage (bike): 14

New gear: OLYMPUS VN-8100PC USB PC Interface Digital Voice Recorder ($60)

New Jeramy trail at Buzzards Roost

On the first Monday in May I decided to map the new trail at the Buzzards Roost Trail Network, which the BHMBA recently completed to satisfy a standing grant. Riding with a broken hand, I managed to get a good log and, after conferring with Dan S., put the new trail up on PahaSapaTrails.com with a description, under the name Jeramy.

Log: http://connect.garmin.com/activity/83098488

Mileage (car): 25

Mileage (bike): 5.65

In-language commenting

Lua is clever and uses --[[ … --]] for multi-line comments. The useful part of this scheme is that the syntax used to close a multi-line comment IS itself a comment, so no syntax error results if you comment out the opener by adding an extra -, which re-enables the commented-out section. The part that I don’t like is how single-line comments are specified, with --. Just IMO, FWIW, etc., I don’t think the same characters used for basic operators should be re-used for something like a comment. Really, shouldn’t a = a -- 2 result in a-4?

The same goes for C99/C++: how does using // to specify a comment really make sense? a //= 2 ? a ///= 2 ? /* */-style comments are better, I suppose, because they are an obvious no-op.

Ideally I think we would be using something that allows for the abuse that Lua’s multi-line comments give us, without overloading any of the basic operators provided by the language. “ perhaps?

“ Comment

“

Multi-line comment

“

“ “

“ Active multi-line comment?

a //= 37;

“

Maybe, I’m not sure.

There are also languages that allow comments to effectively serve as documentation that is preserved (potentially) at runtime. For example, Python assumes unassigned strings immediately inside a class or function definition are to serve as a documentation block for that section. These documentation blocks can be accessed through runtime introspection, or used with appropriate tooling to generate documentation. This is a great approach from my experience, and perhaps -all- comments should be given the opportunity (optionally) to persist alongside the code for which they are intended.

If one is to take this approach, how does one reconcile comment specification with static string initialization, especially with regard to whitespace (esp. newline) handling? How does one specify to what block of code a comment refers when not at the beginning of a closure of some sort? Is there even any point in preserving these comments for any sort of runtime introspection, or should the goal be simply the production of documentation, à la Literate Programming?

JSONRequest over Flash

I just hacked up JSONRequest support in Flash for a project I am working on and thought I would share. The cancel method is unimplemented and it is basically untested as yet.

Source as a FlashDevelop project available here:

http://github.com/evilsjg/hacks/tree/master/jsonrequest

Generalized string search improvement for needles with a small or numerically similar alphabet

I have been on a bit of a pointless optimization kick lately, and decided to see what I could do with string search. Most of the fast string search algorithms work on the principle of a sliding window for the purpose of skipping characters which don’t need to be checked. The best of these use a fair (fair being relative) amount of storage and extra cycles in the loop to make sure they are skipping as many characters as possible.

I am sure this has been done before, but I haven’t seen it. In the code below I have implemented an unobtrusive extra level of skips on top of the well-known Boyer-Moore-Horspool algorithm. Basically, all of the characters of the key are AND’ed together and the result is stored. If the result is zero, which happens if the alphabet is large/sparse enough, the extra checks are conditionalized away. If the result is non-zero, we very quickly check for mismatches in the haystack by AND’ing the haystack character being checked against the stored result and seeing whether that is equal to the stored result. If it is not, the character cannot appear anywhere in the needle (every needle character has all of those bits set), and we can skip the full length of the needle in just a few instructions. If the two are equal, we have just checked a potentially matching character and need to fall back to our regular checking routine. In some of those cases the character will turn out not to match and our efforts will have been wasted.
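For readers who do not want to open the linked files, here is a minimal sketch of the idea as described above. It is not the code from boyer-moore-horspool-sjg.c: the function name `bmh_and_search`, its signature, and the choice to test the last character of the current window are assumptions made for illustration.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical sketch: Boyer-Moore-Horspool with an AND-mask pre-filter.
 * Returns a pointer to the first match, or NULL if there is none
 * (an empty needle is treated as "no match" to keep the sketch short). */
const unsigned char *bmh_and_search(const unsigned char *hay, size_t hlen,
                                    const unsigned char *needle, size_t nlen)
{
    size_t skip[UCHAR_MAX + 1];
    unsigned char mask = UCHAR_MAX;
    size_t i, pos = 0;

    assert(hay != NULL && needle != NULL);
    if (nlen == 0 || hlen < nlen)
        return NULL;

    /* Standard Horspool bad-character table. */
    for (i = 0; i <= UCHAR_MAX; i++)
        skip[i] = nlen;
    for (i = 0; i < nlen - 1; i++)
        skip[needle[i]] = nlen - 1 - i;

    /* Bits that are set in every character of the needle. */
    for (i = 0; i < nlen; i++)
        mask &= needle[i];

    while (pos <= hlen - nlen) {
        unsigned char last = hay[pos + nlen - 1];

        /* If the window's last character lacks a bit shared by all needle
         * characters, it occurs nowhere in the needle: skip a full needle
         * length without touching the table or comparing anything. */
        if (mask != 0 && (last & mask) != mask) {
            pos += nlen;
            continue;
        }

        /* Possible match: fall back to the regular Horspool check. */
        if (last == needle[nlen - 1] &&
            memcmp(hay + pos, needle, nlen - 1) == 0)
            return hay + pos;
        pos += skip[last];
    }
    return NULL;
}
```

The `mask != 0` test is loop-invariant, so a real implementation could select between two separate loops up front rather than testing it on every iteration, which is presumably what conditionalizing the check away amounts to.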

Original source for Boyer-Moore-Horspool lifted from Wikipedia.

boyer-moore-horspool.c

boyer-moore-horspool-sjg.c

Below are some quickie results from my X3220, compiled with GCC 4.2.1.

$ gcc -O2 boyer-moore-horspool.c -o boyer-moore-horspool

$ gcc -O2 boyer-moore-horspool-sjg.c -o boyer-moore-horspool-sjg

$ time ./boyer-moore-horspool

61

28

16

./boyer-moore-horspool 5.53s user 0.00s system 99% cpu 5.530 total

$ time ./boyer-moore-horspool-sjg

61

28

16

./boyer-moore-horspool-sjg 5.21s user 0.00s system 99% cpu 5.210 total

$ gcc -O3 -mtune=nocona boyer-moore-horspool.c -o boyer-moore-horspool

$ gcc -O3 -mtune=nocona boyer-moore-horspool-sjg.c -o boyer-moore-horspool-sjg

$ time ./boyer-moore-horspool

61

28

16

./boyer-moore-horspool 5.28s user 0.00s system 99% cpu 5.282 total

$ time ./boyer-moore-horspool-sjg

61

28

16

./boyer-moore-horspool-sjg 5.02s user 0.01s system 99% cpu 5.034 total

The, dare I say “elegant”, thing about this addition is that it could relatively easily be applied to many other string search algorithms and completely conditionalized away from the inner loop if the results are going to be ineffectual.

Virtual machine opcode dispatch experimentation

I was reading “The case for virtual register machines” recently and decided to do a bit of experimentation with different opcode dispatch methods. Apparently, up to 60% of the CPU time burned by common virtual machines is due to branch mispredicts. This is rather a silly problem to have in the context of opcode dispatch, considering the VM knows quite readily exactly where it will be branching to for each VM instruction. As a result, there is really no reason for the mispredicts apart from the fact that we can’t actually tell the CPU what we know. There is no useful mechanism of any sort (at least on all x86 CPUs that I know of) to say to the CPU, “the branch at foo will go to bar” (short of JIT’ing everything, which can indirectly solve the branch mispredicts that happen at the opcode dispatch stage). The best we can really hope to do is attempt to seed the branch predictor with past branch information that will hopefully prove useful in the future. This proves to be somewhat problematic, as different CPUs have branch predictors implemented in different ways and with different capabilities, and varying mispredict penalties. You also tend to burn cycles and space compared to more direct implementations, so you have to find the algorithm that lets you come out ahead due to increased prediction accuracy.

To really figure out what is going to work best for a full blown VM, I think you need to start at the beginning. The paper above referenced a couple of different ways that opcode dispatch is typically accomplished, but I wanted to write my own test cases and figure out exactly what would work the best, and more importantly, what definitely was not going to work, so that I could avoid wasting time on it in the future. These are more important simply because the faster running algorithms will very likely be somewhat dependent on the number of opcodes a VM implements and the frequency with which it executes opcodes repeatedly or in the same order.

My preliminary test cases are on github here: http://github.com/evilsjg/hacks/tree/master/troa/test/dispatch, and the runtime results with various compilers on various CPUs are here: http://github.com/evilsjg/hacks/tree/master/troa/test/dispatch/RESULTS.

As you can see, the “goto direct” version is the fastest in every case by a relatively healthy margin. To qualify these results I implemented the same method of dispatch as the goto direct case in the Lua VM. Much to my dismay it was consistently (~10%) slower than the switch-based dispatch that is standard in Lua. After quickly realizing it was purely a function of opcode count, 5 in my tests vs 38 in Lua, I modified my Lua patch to be more like the goto direct 2 example. Runtimes are not provided in the RESULTS file for this, but it was marginally slower than the goto direct case. After this, Lua was consistently faster (up to around 30%) on some of my test hardware, and marginally slower on others. Making minor changes to the breadth or depth of the nested if or switch statements expanded into each opcode had minor changes one way or the other on all processors tested. Typically, faster on my Xeon 3220 meant slower on my Athlon XP 2500+, and vice versa, but by differing magnitudes. The Xeon gets faster, faster than the Athlon slows.
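To make the two basic styles concrete, here is a minimal sketch of switch-based dispatch next to a "goto direct" style loop. It is not taken from the test cases linked above; it assumes that "goto direct" means the GCC labels-as-values extension (also supported by Clang), and the five toy opcodes and function names are invented for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical five-opcode accumulator machine. */
enum { OP_INC, OP_DEC, OP_DOUBLE, OP_NEG, OP_HALT };

/* Switch dispatch: every instruction funnels through one shared
 * indirect branch at the top of the loop. */
long run_switch(const unsigned char *code)
{
    long acc = 0;
    for (;;) {
        switch (*code++) {
        case OP_INC:    acc++;      break;
        case OP_DEC:    acc--;      break;
        case OP_DOUBLE: acc *= 2;   break;
        case OP_NEG:    acc = -acc; break;
        case OP_HALT:   return acc;
        default:        assert(!"unknown opcode"); return acc;
        }
    }
}

/* Computed-goto dispatch: each handler ends with its own indirect jump,
 * so the predictor sees one branch per opcode instead of one for all.
 * Assumes the bytecode only contains valid opcodes. */
long run_goto(const unsigned char *code)
{
    static void *const labels[] = {
        &&do_inc, &&do_dec, &&do_double, &&do_neg, &&do_halt
    };
    long acc = 0;

#define DISPATCH() goto *labels[*code++]
    DISPATCH();
do_inc:    acc++;      DISPATCH();
do_dec:    acc--;      DISPATCH();
do_double: acc *= 2;   DISPATCH();
do_neg:    acc = -acc; DISPATCH();
do_halt:   return acc;
#undef DISPATCH
}
```

Both functions compute the same result for the same bytecode; the only difference is where the indirect branches live, which is what the branch predictor cares about.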

The entry point to my post about this on the Lua list can be found here.

There is obviously performance to be had here, probably quite a bit of performance. My next bit of testing will focus on expanded (# of opcodes) versions of the faster test cases, with more realistic opcode distribution. In terms of algorithmic improvements, I am going to try grouping opcodes in various ways, adding the group identifier to the opcode itself, so that the dispatch data structures can nest like switch (group) { case n: switch (op) { } * n }. I am also going to play with the concept of simple opcode or group ordering rules. The compiler frontend of any VM follows some set of rules, intended or not, for generating the opcodes that the VM executes. Even with a VM implementation that does not enforce those rules, and allows opcode execution in any order, knowing the likely order will no doubt be useful for optimization.
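The nested switch (group) / switch (op) layout could be sketched roughly as follows. The encoding (group in the high four bits of the opcode byte, operation in the low four) and all of the names are assumptions made for this example, not a description of any existing test case.

```c
#include <assert.h>

/* Hypothetical encoding: high nibble = group, low nibble = op within group. */
#define GROUP_SHIFT 4
#define OP_MASK     0x0f
#define MAKE_OP(group, op) ((unsigned char)(((group) << GROUP_SHIFT) | (op)))

enum { G_ARITH, G_CONTROL };          /* groups */
enum { A_INC, A_DEC, A_DOUBLE };      /* ops in G_ARITH */
enum { C_NOP, C_HALT };               /* ops in G_CONTROL */

long run_grouped(const unsigned char *code)
{
    long acc = 0;
    for (;;) {
        unsigned char insn = *code++;
        unsigned op = insn & OP_MASK;

        switch (insn >> GROUP_SHIFT) {      /* first-level dispatch */
        case G_ARITH:
            switch (op) {                   /* second-level dispatch */
            case A_INC:    acc++;    break;
            case A_DEC:    acc--;    break;
            case A_DOUBLE: acc *= 2; break;
            default:       assert(!"unknown arithmetic op"); return acc;
            }
            break;
        case G_CONTROL:
            switch (op) {
            case C_NOP:  break;
            case C_HALT: return acc;
            default:     assert(!"unknown control op"); return acc;
            }
            break;
        default:
            assert(!"unknown group");
            return acc;
        }
    }
}
```

Whether the extra level pays for itself would depend on how well each of the smaller inner branches predicts, which is exactly the kind of thing that would need measuring per CPU.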

A requirement in my mind early on for the TROA VM was the easy evaluation of expressions on vectors or streams in the language to make extensive use of SIMD possible inside the VM. This concept is being weighted right up to the top of my list after having done this opcode dispatch testing. Even in the basic unoptimized case where your opcode operates on its vector/stream serially, there is still potential for double-digit overall program performance improvement due to the reduction in opcode dispatches.
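As a rough illustration of the dispatch reduction, the toy interpreter below counts how many dispatches it performs. The opcodes, the one-byte index operand, and the function name are all hypothetical; the vector opcode here just loops serially, which corresponds to the basic unoptimized case mentioned above.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical opcodes:
 *   V_ADD1 <idx>  increment one element (one dispatch per element)
 *   V_VADD        increment every element (one dispatch for the whole vector)
 *   V_HALT        stop */
enum { V_ADD1, V_VADD, V_HALT };

/* Runs the bytecode over data[0..len) and returns the number of dispatches. */
size_t run_counting(const unsigned char *code, int *data, size_t len)
{
    size_t dispatches = 0;
    for (;;) {
        dispatches++;
        switch (*code++) {
        case V_ADD1: {
            unsigned char idx = *code++;    /* operand: element index */
            assert(idx < len);
            data[idx] += 1;
            break;
        }
        case V_VADD:
            /* Serial loop; a real VM could use SIMD here instead. */
            for (size_t i = 0; i < len; i++)
                data[i] += 1;
            break;
        case V_HALT:
            return dispatches;
        default:
            assert(!"unknown opcode");
            return dispatches;
        }
    }
}
```

For a four-element vector, the scalar program needs four V_ADD1 dispatches plus the halt, while the vector program needs one V_VADD plus the halt, and the gap grows linearly with the vector length.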

Object inheritance musings

class CProcess implements IController

class CThread implements IController

x = Controller(CThread).new()

interface IFDEvent

class Select implements IFDEvent

class Poll implements IFDEvent

class KEvent implements IFDEvent

class SocketServer extends FDEvent(Poll) [defines ISocketServer]

class HTTPServer extends SocketServer [defines IHTTPServer]

class FTPServer extends SocketServer [defines IFTPServer]

server = HTTPServer.new()

-or-

server = HTTPServer(SocketServer(KEvent)).new()

No? Where does this break down?